Data Systems using Azure

Summary

I have worked with Azure cloud services for the past one to two years and have earned two Microsoft certifications along the way: Azure Fundamentals and Azure Data Engineering. In this post, I highlight a few projects in which I played a central role. It is less a guide than a walkthrough of how these services fit together to meet each project’s objectives.

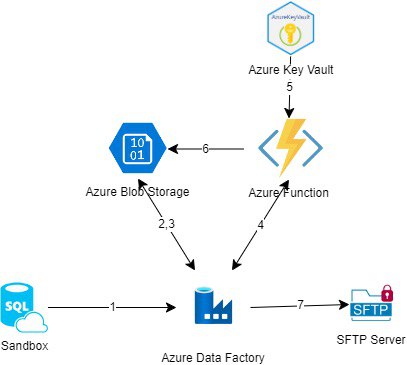

SFTP Architecture

The aim of this project was to build a data system that automates the transfer of encrypted files to a commercial partner over SFTP. The data had to remain encrypted throughout the process.

Database (Sink)

Data for the batch transfer was first transformed for business use within the data warehouse and then loaded onto this database server at regular batch intervals. The server was therefore the sink of the warehouse load and the source from which our data system retrieved its data.

Azure Data Factory (Transformation)

Azure Data Factory (ADF) was the central pipeline orchestration tool for the batch transfers and tied the other services together. Its primary functions were:

- Adapting data to align with the format required by our commercial partner’s existing SFTP server infrastructure.

- Transmitting the finalized, encrypted, and formatted files to the destination SFTP server.

Azure Blob Storage (Staging)

Transformed data was stored as blobs in Azure Blob Storage, which served as an interim staging area.

To keep encrypted and unencrypted data clearly separated, encrypted files were staged apart from the unencrypted ones. Staging the data twice also made debugging easier: encryption issues could be investigated from the unencrypted files onward, without rerunning the data transformation.

Each batch’s encrypted AES keys were also stored in this storage account.
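As a rough illustration of the two-stage staging described above, the blob names for one batch could be derived as follows. This is only a sketch: the container names (`staging-plain`, `staging-encrypted`), the `.enc` and `.key` suffixes, and the `batch_paths` helper are hypothetical and not the names used in the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BatchPaths:
    """Blob names for one file in one batch (all names are hypothetical)."""
    plain: str        # transformed, not yet encrypted
    encrypted: str    # AES-encrypted file, ready for SFTP
    wrapped_key: str  # AES key, itself encrypted with RSA


def batch_paths(batch_id: str, filename: str) -> BatchPaths:
    # Unencrypted and encrypted data live under separate prefixes so that
    # a failed batch can be inspected at either stage.
    return BatchPaths(
        plain=f"staging-plain/{batch_id}/{filename}",
        encrypted=f"staging-encrypted/{batch_id}/{filename}.enc",
        wrapped_key=f"staging-encrypted/{batch_id}/{filename}.key",
    )
```

With a layout like this, a problem in the encryption step can be reproduced from the blob under `plain` alone.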

Azure Function (Encryption)

To meet security requirements mandating encryption at rest and in transit, a two-stage hybrid encryption scheme using RSA and AES was applied to the data files themselves: each file is encrypted with an AES key, and that AES key is in turn encrypted with RSA.

Although Azure already encrypts data at rest and in transit, file-level hybrid encryption required a custom solution. The encryption logic was implemented and deployed as an Azure Function using the Python v2 programming model.
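To make the two stages concrete, here is a minimal sketch of hybrid encryption using the third-party `cryptography` package. The post does not specify the AES mode, RSA padding, or key sizes, so AES-256-GCM and RSA-OAEP with SHA-256 are assumptions chosen for illustration, and the function names are hypothetical. It is not the code that ran in the Azure Function.

```python
import os

from cryptography.hazmat.primitives import hashes
from cryptography.hazmat.primitives.asymmetric import padding, rsa
from cryptography.hazmat.primitives.ciphers.aead import AESGCM

# Assumed padding scheme for wrapping the AES key with RSA.
_OAEP = padding.OAEP(
    mgf=padding.MGF1(algorithm=hashes.SHA256()),
    algorithm=hashes.SHA256(),
    label=None,
)


def hybrid_encrypt(
    plaintext: bytes, public_key: rsa.RSAPublicKey
) -> tuple[bytes, bytes, bytes]:
    """Return (wrapped_key, nonce, ciphertext) for one file."""
    # Stage 1: encrypt the file with a fresh, per-file AES key.
    aes_key = AESGCM.generate_key(bit_length=256)
    nonce = os.urandom(12)  # 96-bit nonce, the usual size for GCM
    ciphertext = AESGCM(aes_key).encrypt(nonce, plaintext, None)
    # Stage 2: encrypt ("wrap") the AES key with the RSA public key.
    wrapped_key = public_key.encrypt(aes_key, _OAEP)
    return wrapped_key, nonce, ciphertext


def hybrid_decrypt(
    wrapped_key: bytes,
    nonce: bytes,
    ciphertext: bytes,
    private_key: rsa.RSAPrivateKey,
) -> bytes:
    """Reverse both stages; this is what the receiving side would do."""
    aes_key = private_key.decrypt(wrapped_key, _OAEP)
    return AESGCM(aes_key).decrypt(nonce, ciphertext, None)
```

The wrapped key and nonce travel alongside the ciphertext, which matches the idea of storing each batch’s encrypted AES key next to the encrypted file. Only the holder of the RSA private key can recover the AES key and hence the data.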

Azure Key Vault (Secure keys)

The RSA key certificates were stored in Azure Key Vault. The Azure Function accessed them only during the encryption process, which kept the RSA key’s exposure to a minimum.
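The access pattern can be sketched as below: the key material is fetched at call time, used, and never kept in module-level state. This assumes the RSA key is stored as a PEM string in a Key Vault secret, which the post does not confirm; the helper names and the `encrypt` callback are hypothetical. `make_secret_client` needs the `azure-identity` and `azure-keyvault-secrets` packages, while the other two functions work with any object that exposes `get_secret(name).value`.

```python
from typing import Any, Callable


def make_secret_client(vault_url: str) -> Any:
    """Build a Key Vault secret client using the ambient Azure identity."""
    # Imported lazily so the rest of the sketch runs without the Azure SDK.
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient

    return SecretClient(vault_url=vault_url, credential=DefaultAzureCredential())


def fetch_rsa_key_pem(secret_client: Any, secret_name: str) -> bytes:
    """Fetch the PEM-encoded key from Key Vault (assumed stored as a secret)."""
    secret = secret_client.get_secret(secret_name)
    return secret.value.encode("utf-8")


def encrypt_with_vault_key(
    secret_client: Any,
    secret_name: str,
    encrypt: Callable[[bytes, bytes], Any],
    payload: bytes,
) -> Any:
    """Fetch the key, run one encryption call, and let the key go out of scope."""
    pem = fetch_rsa_key_pem(secret_client, secret_name)
    # The key only exists in this local variable for the duration of the call.
    return encrypt(payload, pem)
```

Passing the client in as a parameter also makes the function easy to exercise locally with a stub in place of the real vault.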

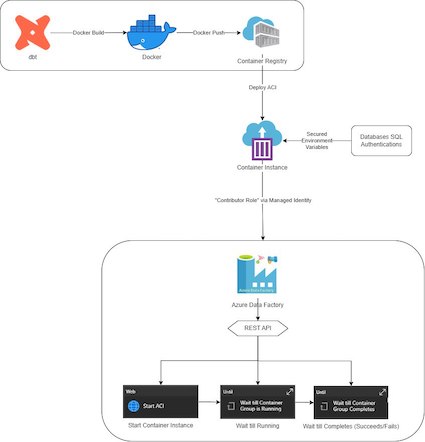

dbt Architecture

The aim of this project was to design a simple architecture for deploying dbt. The goal was to move away from Alteryx as the primary ETL/ELT tool toward a more adaptable and resilient setup built around dbt for data transformation.

Container Registry (Containerisation)

Once the dbt project has been containerized as a Docker image, the image is stored in Azure Container Registry.

Container Instance

The containerized application is deployed as a single instance on Azure Container Instances. Rather than running continuously, the container is started only for dbt runs and stays stopped between them, which saves cost.

Data Factory (Orchestration & Monitoring)

Azure Data Factory is the orchestration tool, responsible for scheduling and executing the containerized dbt application. Triggers start the container and kick off the dbt runs.

The Azure REST API is also used to monitor the container’s status, so the pipeline can track the container’s state during a run.
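In the project these calls are made from Data Factory itself, but the same start-then-poll logic can be sketched in Python to show what is being asked of the REST API. The URL shape follows the Azure Resource Manager convention for container groups. The `api-version` value, the set of terminal states, and all function names are assumptions to check against the current Container Instances REST reference. The `fetch` callable is a placeholder for an authenticated GET request.

```python
import time
from typing import Callable

ARM = "https://management.azure.com"
API_VERSION = "2023-05-01"  # assumption: verify against the current docs
TERMINAL_STATES = {"Succeeded", "Failed", "Stopped"}  # assumed terminal states


def container_group_url(
    subscription_id: str, resource_group: str, name: str, action: str = ""
) -> str:
    """URL for a container group; pass action="start" to build the start call."""
    base = (
        f"{ARM}/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.ContainerInstance/containerGroups/{name}"
    )
    suffix = f"/{action}" if action else ""
    return f"{base}{suffix}?api-version={API_VERSION}"


def group_state(body: dict) -> str:
    """Pull the group-level state out of a GET response body."""
    return body.get("properties", {}).get("instanceView", {}).get("state", "Unknown")


def wait_until_finished(
    fetch: Callable[[], dict],
    poll_seconds: float = 30.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the container group reaches a terminal state."""
    for _ in range(max_polls):
        state = group_state(fetch())
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)
    raise TimeoutError("container group did not finish within the polling budget")
```

A run would POST to the `start` URL, then poll the plain URL until the state is terminal, and fail the pipeline if the final state is not the successful one.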